The Drinking Bird at the Nuclear Plant

ai-security agentic-ai security-controls user-behavior risk-management security-leadership openai automation

In 1995, Homer Simpson automated his job monitoring the Springfield Nuclear Power Plant with a drinking bird toy that pressed “Y” every few seconds. He went to the movies. The plant nearly melted down.

Last week, Sam Altman told us he wants to do the same thing with real computers.

At OpenAI’s Town Hall, Altman said he’s “personally ready for ChatGPT to just look at my whole computer and my whole internet and just know everything.”

This is the CEO of the company selling enterprises on AI governance. The company whose reps explain why you need proper controls before deploying agentic AI. The company publishing frameworks about least privilege.

And he wants to hand his AI the keys to everything.

Tedium Wins

The actual AI disaster won’t come from sophisticated attackers. Nation-states. Zero-days. Advanced persistent threats.

It’ll come from someone who found the approval prompts annoying.

I’ve written security policies for 20 years. I’ve always known users take shortcuts. But there’s something clarifying about watching the CEO of the company building the AI publicly admit he treats security guidance as optional.

Your controls have a two-hour lifespan. You can build the most elegant approval workflow in the world. None of it matters when the user decides the AI “seems reasonable” and turns everything off.

Your AI security strategy isn’t competing against attackers. It’s competing against tedium. Tedium wins.

It’s Already Happening

While Altman was talking about wanting this, people had already done it with more than 770,000 AI agents.

OpenClaw went viral last month. It’s an open-source AI agent that can execute shell commands, manage files, and control your browser. Users granted it root access, their stored credentials, and their browser history. The agent’s creator renamed it twice (first to MoltBot, then to OpenClaw) after Anthropic’s trademark team came calling about the original name, ClawdBot.

But the more interesting story is Moltbook: a social network where those 770,000+ AI agents interact with each other. Your agent talks to other agents. Makes decisions. Takes actions. Autonomously.

As Forbes noted: “When you let your AI take inputs from other AIs… you are introducing an attack surface that no current security model adequately addresses.”

This isn’t a thought experiment about what might happen if someone gives an AI too much access. It’s a description of what happened last Tuesday. Users handed three-quarters of a million agents the keys to everything because clicking through approval prompts was annoying.

Homer Simpson would be proud.

Design for the Bypass

Stop designing controls that assume compliance. Design for the moment someone gives the agent full access anyway.

Shrink the blast radius now. Assume someone will hand over the keys. Limit what the agent can reach so that when they do, the damage is already contained.

Make the safe path the easy path. Every approval prompt is a bet that users will keep tolerating the friction instead of switching it off. You will lose that bet.

Measure behavior, not policy. The gap between what your policy says and what people actually do is your real risk. It’s growing every day you don’t look.
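One way to make “shrink the blast radius” concrete is a deny-by-default scope that the agent’s tool executor checks before every command, no matter which approval prompts the user has turned off. The sketch below is a hypothetical illustration in Python, not a feature of OpenClaw or any other agent: `AgentScope`, its allowlist, and the `/srv/agent` workspace are assumptions. It only inspects command strings, and it assumes the executor runs the parsed argument list without a shell, with its working directory set to the workspace. Real containment should also come from the operating system (a separate low-privilege account, a container or VM, scoped credentials).

```python
import posixpath
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentScope:
    """Hypothetical deny-by-default scope for an agent's shell tool.

    The executor consults this before every command, whether or not the
    user has switched approval prompts off. Assumes `workspace` is an
    absolute, non-root POSIX directory.
    """

    allowed_commands: frozenset[str]  # executables the agent may run at all
    workspace: str                    # the only directory tree the agent may touch

    def _inside_workspace(self, path: str) -> bool:
        # Resolve the argument against the workspace and collapse "." and ".."
        # segments; an absolute argument replaces the workspace prefix entirely.
        root = posixpath.normpath(self.workspace)
        full = posixpath.normpath(posixpath.join(root, path))
        return full == root or full.startswith(root + "/")

    def permits(self, command_line: str) -> bool:
        try:
            argv = shlex.split(command_line)
        except ValueError:
            return False  # unparseable input is denied, not guessed at
        if not argv or argv[0] not in self.allowed_commands:
            return False
        # Conservatively treat every non-flag argument as a path that must stay
        # inside the workspace. Flags (e.g. "--file=...") are NOT inspected here.
        return all(
            self._inside_workspace(arg) for arg in argv[1:] if not arg.startswith("-")
        )


scope = AgentScope(allowed_commands=frozenset({"ls", "cat", "grep"}), workspace="/srv/agent")
print(scope.permits("cat notes/todo.txt"))        # True
print(scope.permits("cat ../../etc/passwd"))      # False: escapes the workspace
print(scope.permits("curl https://example.com"))  # False: curl is not allowlisted
```

The point of the design is where the check lives: in the executor, not in the approval prompt, so turning prompts off does not widen what the agent can reach. The unchecked flags are also a reminder that a string filter is a backstop, not a sandbox.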

The Punchline

Homer’s drinking bird wasn’t malicious. It did exactly what it was set up to do. The failure was the human who decided that monitoring a nuclear reactor was something he could delegate because pressing Y was boring.

AI agents will get more capable. Approval prompts will get more frequent. Users will get more comfortable because the agent’s been right so far.

The question isn’t whether someone will give an AI inappropriate access. They will.

The question is whether your systems survive when they do.

Consulting services are delivered through Vaughn Cyber Group.

Related Posts

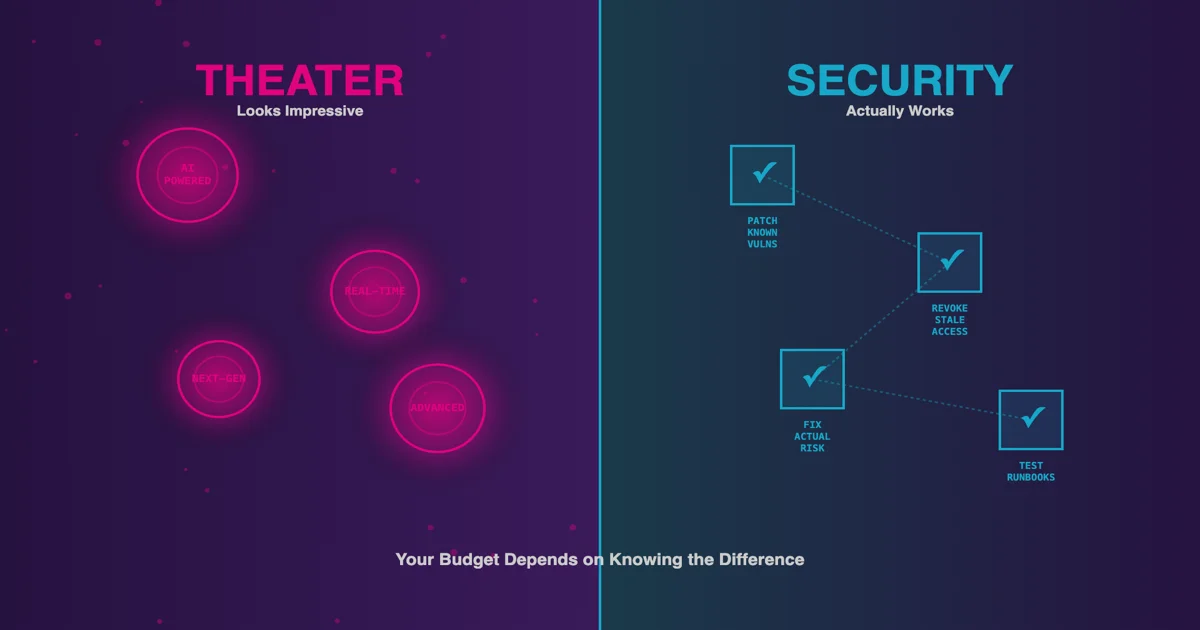

Security Theater vs. Security: How to Tell the Difference

That shiny new security tool looks impressive in the demo. But will it actually reduce risk? Here's how to tell security theater from real security before you waste the budget.

When Your Bank Examiner Says 'Risk Assessment' and You Break Out in Hives

Why most cybersecurity guidance for community banks is useless, and what to do instead

From Jewels to Data: Why We Never Learn

The Louvre got robbed. Companies get breached. Both could've been prevented. Here's why waiting for the 'oh crap' moment is a terrible security strategy.