Automating Ourselves Into a Cybersecurity Crisis

May 10th, 2040. Your cloud infrastructure is under attack. The AI sees nothing wrong. Every alert looks normal.

Your analysts stare at the dashboards, waiting for the AI’s recommendation — because they’ve never learned how to investigate without it. The last generation of “manual” investigators retired years ago. You didn’t train another one.

And now, your infrastructure is compromised end-to-end.

The Ladder We’re Breaking

In cybersecurity, we’re dismantling the bottom rungs of the career ladder. Entry-level SOC and incident response roles are being automated away.

It solves a short-term resourcing problem — but it also cuts off the path for new talent to build investigative instincts.

We’ve seen this movie before.

As COBOL programmers retired, entire industries scrambled to keep decades-old mainframes alive. That crisis stalled businesses. A cybersecurity talent collapse could stop everything.

Why This Matters Now

- Cybersecurity workforce gap: Over 3.5 million unfilled jobs worldwide (ISC², 2024)

- SOC entry-level roles shrinking: Automation removes the very positions where analysts learn foundational skills

- AI dependence: Without manual investigative experience, the next generation won’t be able to validate, question, or override AI systems when it matters most

The Path Forward

We can fix this — but only if we act before the “ladder” is gone.

1. Build true apprenticeships.

Pair new grads with seasoned analysts for 18–24 months. Let them investigate real incidents with mentorship, not just watch from the sidelines.

2. Bring back rotational programs.

Partner with universities for co-ops and residencies. Rotate students through SOC ops, threat hunting, and vulnerability management.

3. Fix cybersecurity degree programs.

Too many programs skip deep technical foundations in favor of frameworks and compliance checklists. Graduates need to understand how systems actually work — networks, operating systems, code — not just tool certifications that will be obsolete before they graduate.

4. Make AI a teacher, not just a tool.

Design AI to explain its reasoning, show the evidence trail, and ask the analyst to verify or challenge the conclusion.

5. Protect the “manual override” culture.

Every automated action should be traceable, explainable, and reversible by a human. This is not optional; it’s resilience.
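To make points 4 and 5 concrete, here is a minimal Python sketch of what "explainable and reversible" could look like in practice. Everything in it is hypothetical: the `Finding` and `AutomatedAction` names, their fields, and the rule that an action runs only after an analyst has confirmed the finding are illustrative assumptions, not a reference to any particular SOC platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Optional


@dataclass
class Finding:
    """Hypothetical AI conclusion, packaged with what an analyst needs to check it."""
    conclusion: str
    reasoning: str                           # the model's explanation, in plain language
    evidence: List[str]                      # log lines or query results it relied on
    analyst_verdict: Optional[str] = None    # "confirmed" or "challenged", set by a human
    analyst_note: str = ""

    def review(self, verdict: str, note: str) -> None:
        # The analyst must take a position and write down why.
        if verdict not in ("confirmed", "challenged"):
            raise ValueError("verdict must be 'confirmed' or 'challenged'")
        if not note.strip():
            raise ValueError("a written justification is required")
        self.analyst_verdict = verdict
        self.analyst_note = note


@dataclass
class AutomatedAction:
    """Hypothetical response action that records why it ran and how to undo it."""
    name: str
    finding: Finding
    apply: Callable[[], None]
    revert: Callable[[], None]
    history: List[str] = field(default_factory=list)

    def _log(self, event: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.history.append(f"{stamp} {event}")

    def run(self) -> None:
        # Assumed policy: refuse to act on a finding no human has confirmed.
        if self.finding.analyst_verdict != "confirmed":
            raise PermissionError("finding has not been confirmed by an analyst")
        self.apply()
        self._log(f"applied {self.name}: {self.finding.conclusion}")

    def override(self, analyst: str, reason: str) -> None:
        # Manual override: undo the action and record who did it and why.
        self.revert()
        self._log(f"reverted {self.name} by {analyst}: {reason}")
```

A junior analyst working with something like this has to read the evidence and write a justification before anything happens, and a senior analyst can call `override(...)` later and leave a record of the reason. The confirmation gate is one possible policy; a team that needs faster containment might let actions run first and require the review afterward.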

The Choice Is Ours

The choice we make today, to automate everything or to automate smartly, will determine whether we have a cybersecurity workforce in 2045 or just a group of people who know how to restart AI systems when they inevitably fail us.

Which future are you building toward?

Related Posts

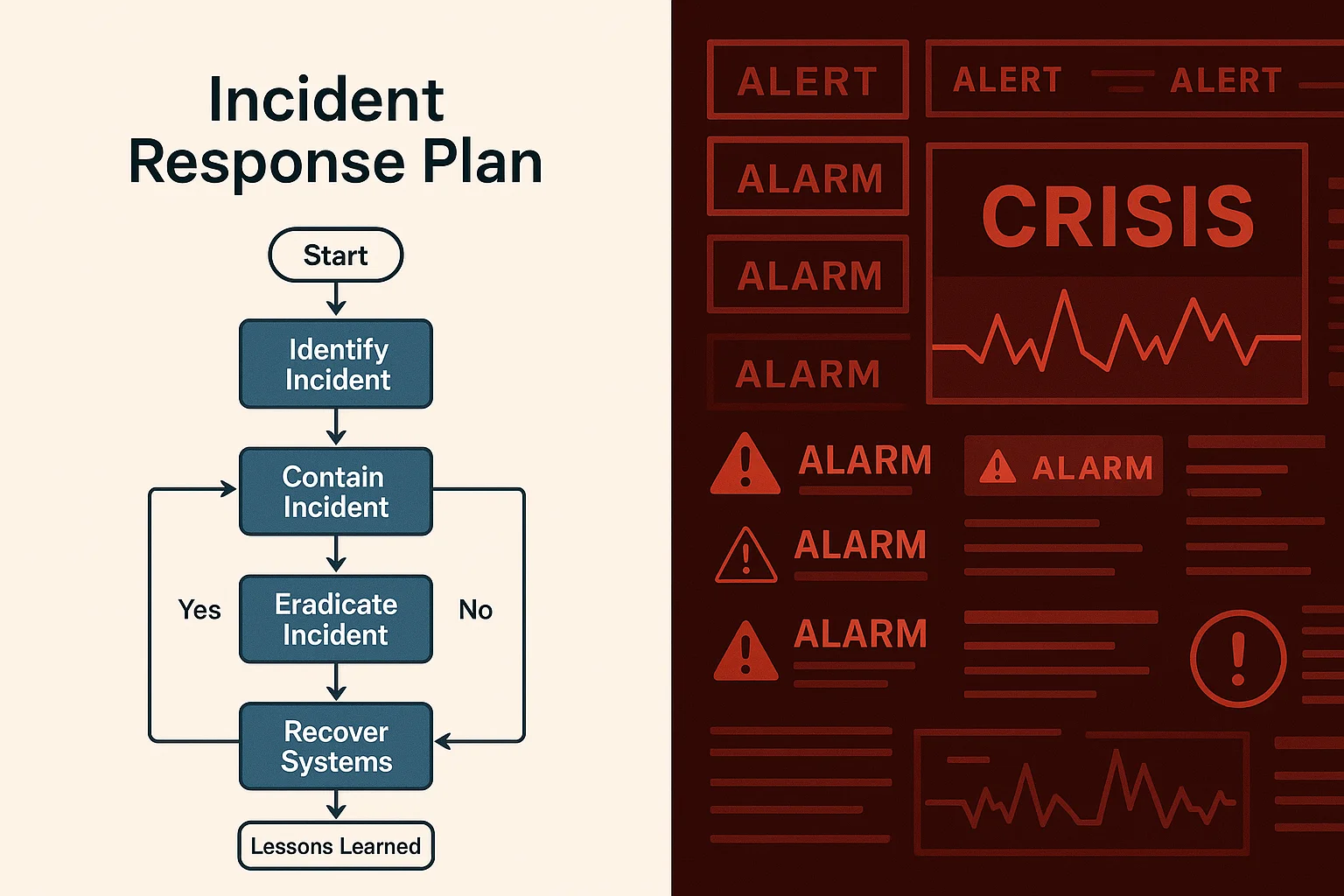

When Perfect Plans Meet Imperfect Reality

Sometimes the consequences of IR plan failure aren't just about downtime or data. Sometimes they're about life and death.

The Question That Made Everyone in the Room Go Silent

I asked one simple question about incident response plans. The silence that followed told me everything I needed to know.

Feats of Endurance and Stupidity: What Running in Circles Teaches Us About Cybersecurity

What ultramarathon running teaches us about incident response and cybersecurity resilience. Lessons from a CISO on training for chaos, mental endurance, and why preparation beats reaction.